DeepAR and Amazon IVS Android integration

Prerequisites

See the prerequisite here.

Sample app

We will showcase the integration with a simple Android app that shows a camera preview and lets you add AR masks and filters. To follow along with this tutorial, clone this GitHub repo and open it in Android Studio.

Install DeepAR SDK

Download the Android DeepAR SDK from the DeepAR developer portal here and paste the downloaded deepar.aar file into the deepar directory of the sample app.

Create a project with a free plan on the DeepAR developer portal here.

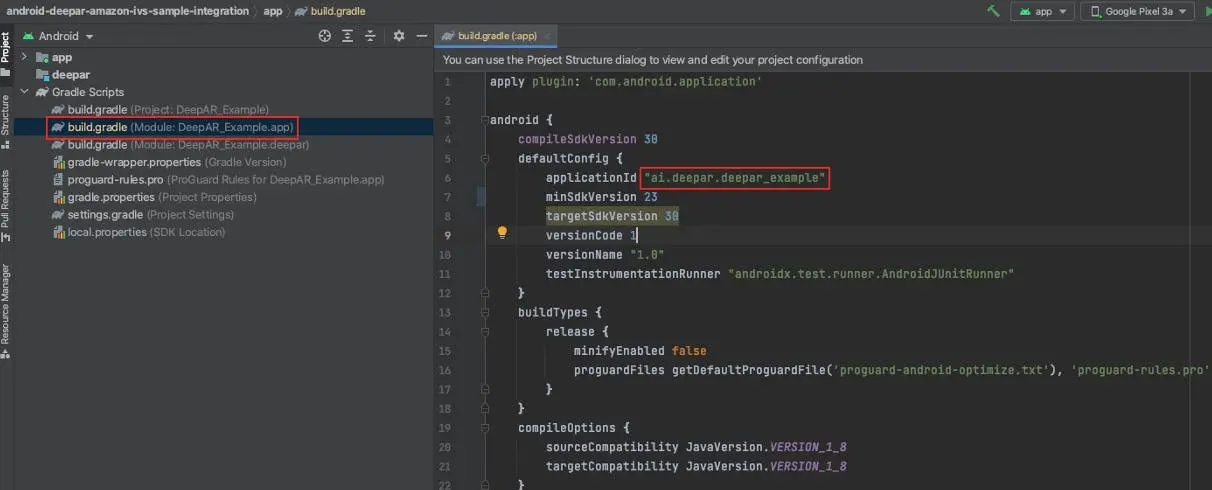

Add an Android app to the DeepAR project you just created. Use the application ID from the sample app (you will find it in the build.gradle file).

Copy the generated license key from the DeepAR developer portal and paste it into MainActivity.java in the sample app, replacing your_license_key_here.

Now run Gradle Sync in Android Studio.

Set up an Amazon IVS channel

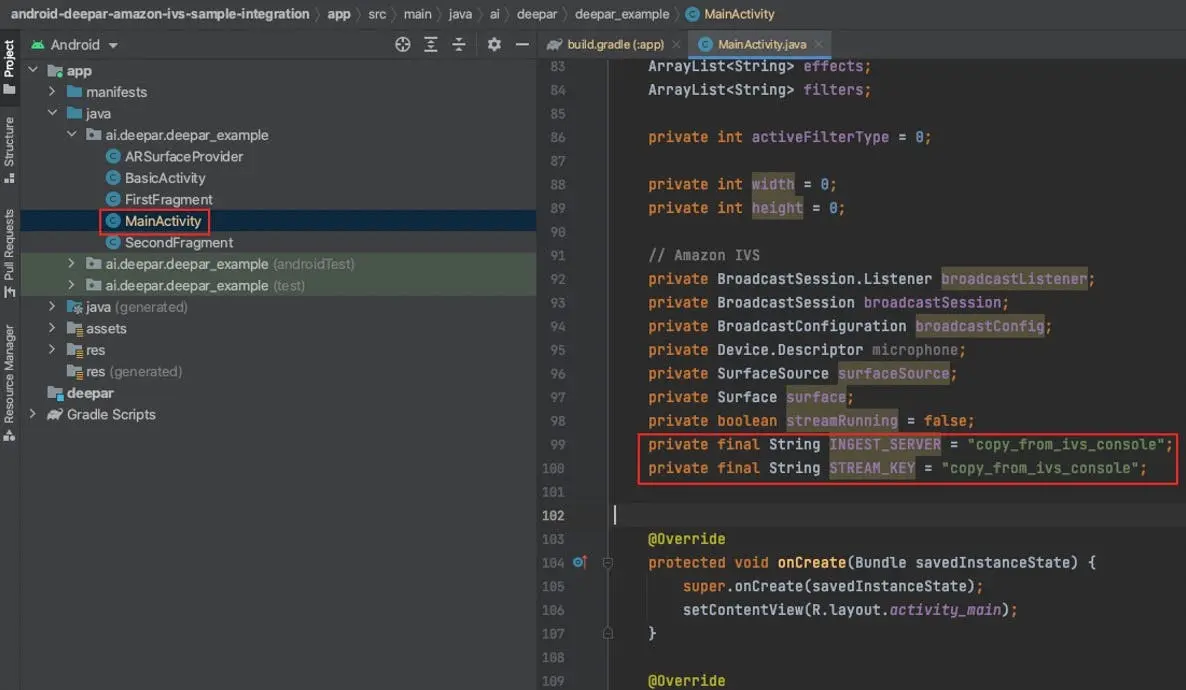

Copy the Ingest server and Stream key from the Amazon IVS console and paste them into MainActivity.java.
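Because the license key, ingest server, and stream key are all pasted in by hand, it can help to fail fast when one of them is still missing. The following is a minimal sketch, not part of the sample app: StreamCredentials and requireConfigured are hypothetical names, and treating any value shaped like your_..._here as a placeholder is an assumption modelled on the your_license_key_here text mentioned above.

```java
import java.util.Locale;

/** Fails fast when a pasted credential is missing or still holds placeholder text. */
final class StreamCredentials {
    private StreamCredentials() {}

    /**
     * Returns the trimmed value, or throws if it is null, blank, or a placeholder.
     * The "your_..._here" pattern is an assumption based on the sample app's
     * your_license_key_here text; adjust it to the placeholders you actually use.
     */
    static String requireConfigured(String name, String value) {
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException(name + " is not set");
        }
        String trimmed = value.trim();
        String lower = trimmed.toLowerCase(Locale.ROOT);
        if (lower.startsWith("your_") && lower.endsWith("_here")) {
            throw new IllegalStateException(name + " still contains the placeholder text");
        }
        return trimmed;
    }
}
```

Calling it once for each pasted value before any SDK setup, for example `requireConfigured("STREAM_KEY", STREAM_KEY)`, turns a silent streaming failure into an immediate, readable error.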

Integration steps

The Amazon IVS broadcast SDK provides an API for controlling and capturing device camera images for streaming. To integrate with DeepAR, you will need to provide application logic for controlling the device camera and use the DeepAR SDK to process the images (add AR filters). Then, pass the processed images to the IVS Broadcast SDK via CustomImageSource for streaming.

All the IVS integration logic is contained in the setupIVS method. For this tutorial, we assume that the client wants to stream the preview at 1280x720 resolution (720x1280 in portrait orientation).

Create the Broadcast Session

We will create a broadcast session with a custom slot that uses a custom image source and a default IVS microphone device.

First, create a broadcast listener for logging the IVS SDK state changes and errors.

    broadcastListener = new BroadcastSession.Listener() {
        @Override
        public void onStateChanged(@NonNull BroadcastSession.State state) {
            android.util.Log.d("Amazon IVS", "State = " + state);
        }

        @Override
        public void onError(@NonNull BroadcastException e) {
            android.util.Log.d("Amazon IVS", "Exception = " + e);
        }
    };

Create a broadcast configuration with a custom slot that uses a custom image source. For the audio input of the slot, use the default microphone device so that the IVS SDK handles the sound capture automatically.

    // Portrait by default; swap the dimensions in landscape.
    int streamingWidth = 720;
    int streamingHeight = 1280;
    if (getResources().getConfiguration().orientation == Configuration.ORIENTATION_LANDSCAPE) {
        streamingWidth = 1280;
        streamingHeight = 720;
    }

    // Lambdas can only capture effectively final locals, so copy the values.
    int finalStreamingWidth = streamingWidth;
    int finalStreamingHeight = streamingHeight;

    broadcastConfig = BroadcastConfiguration.with($ -> {
        $.video.setSize(finalStreamingWidth, finalStreamingHeight);
        $.mixer.slots = new BroadcastConfiguration.Mixer.Slot[] {
            BroadcastConfiguration.Mixer.Slot.with(slot -> {
                // Video comes from the custom image source, audio from the microphone.
                slot.setPreferredVideoInput(Device.Descriptor.DeviceType.USER_IMAGE);
                slot.setPreferredAudioInput(Device.Descriptor.DeviceType.MICROPHONE);
                slot.setName("custom");
                return slot;
            })
        };
        return $;
    });
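The orientation check above can also be pulled out into a small value type so that the same size is reused for the broadcast configuration, the custom image source, and the DeepAR render surface. This is only a sketch in plain Java: StreamSize and forOrientation are hypothetical names that do not appear in the sample app, and the boolean flag stands in for the Android orientation comparison.

```java
/** Picks the stream resolution for the current device orientation. */
final class StreamSize {
    final int width;
    final int height;

    private StreamSize(int width, int height) {
        this.width = width;
        this.height = height;
    }

    /**
     * Returns 1280x720 in landscape and 720x1280 in portrait.
     * On Android, the flag would come from comparing
     * getResources().getConfiguration().orientation with
     * Configuration.ORIENTATION_LANDSCAPE.
     */
    static StreamSize forOrientation(boolean landscape) {
        int longSide = 1280;
        int shortSide = 720;
        return landscape
                ? new StreamSize(longSide, shortSide)
                : new StreamSize(shortSide, longSide);
    }
}
```

A local variable holding the result is never reassigned, so a lambda can capture it directly and the extra finalStreamingWidth and finalStreamingHeight copies would no longer be needed.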

Create a broadcast session.

    broadcastSession = new BroadcastSession(this, broadcastListener, broadcastConfig, Presets.Devices.MICROPHONE(this));

Custom image source

Now create a custom image input source for the IVS Broadcast SDK. Set its resolution and rotation, and get the Android Surface from the custom image source.

    surfaceSource = broadcastSession.createImageInputSource();
    if (!surfaceSource.isValid()) {
        throw new IllegalStateException("Amazon IVS surface not valid!");
    }
    surfaceSource.setSize(streamingWidth, streamingHeight);
    surfaceSource.setRotation(ImageDevice.Rotation.ROTATION_0);
    surface = surfaceSource.getInputSurface();

Bind the custom image source to the custom slot we defined.

    boolean success = broadcastSession.getMixer().bind(surfaceSource, "custom");

Set the DeepAR SDK to render its output to the custom surface.

    deepAR.setRenderSurface(surface, streamingWidth, streamingHeight);

Preview the stream on the screen

Get the preview view, which shows what the IVS Broadcast SDK will stream, and add it to the screen layout.

    TextureView view = broadcastSession.getPreviewView(BroadcastConfiguration.AspectMode.FIT);
    ConstraintLayout layout = (ConstraintLayout) findViewById(R.id.rootLayout);
    layout.addView(view, 0);

Start the broadcast session streaming

    broadcastSession.start(INGEST_SERVER, STREAM_KEY);

Run the app

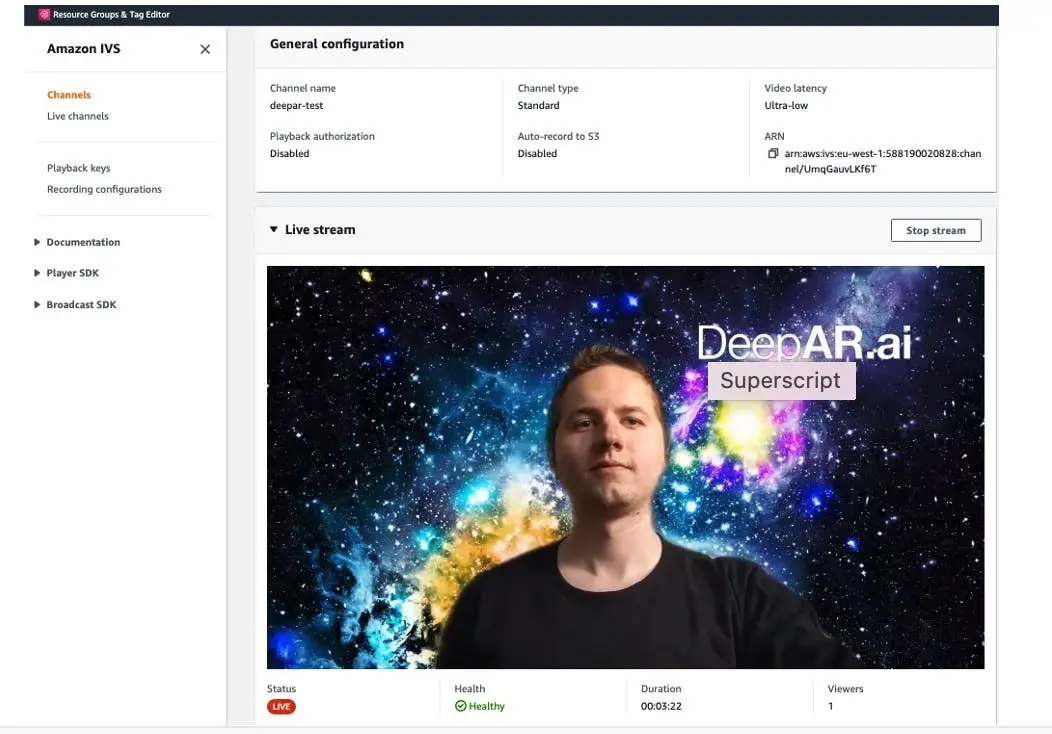

Now run the app on a device. You should see a camera preview. Use the left and right buttons to change the AR masks and filters.

Open the channel you created in the Amazon IVS console and go to the live stream tab. You should see the video stream augmented with AR effects.

Additional info

Feeding camera frames to DeepAR

In the sample app, we use a standard Android CameraX implementation to:

- Start and handle the camera.

- Feed the camera frames to DeepAR via the receiveFrame method.

Find the implementation details in the setupCamera method.
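The sample's setupCamera method is not reproduced here. As a rough illustration of one common way to prepare a frame, assuming the camera delivers each image as three separate YUV plane buffers, the planes can be copied back to back into a single direct ByteBuffer before it is handed to receiveFrame. FramePacker and pack are hypothetical names, and the exact receiveFrame arguments (size, orientation, image format, and so on) should be taken from the DeepAR SDK reference rather than from this sketch.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Packs separate Y, U and V plane buffers into one contiguous buffer. */
final class FramePacker {
    private FramePacker() {}

    /**
     * Copies the remaining bytes of each plane, in Y, U, V order, into a new
     * direct buffer. The source buffers' positions are left untouched.
     */
    static ByteBuffer pack(ByteBuffer y, ByteBuffer u, ByteBuffer v) {
        // duplicate() shares the content but has an independent position and limit.
        ByteBuffer ySrc = y.duplicate();
        ByteBuffer uSrc = u.duplicate();
        ByteBuffer vSrc = v.duplicate();

        int total = ySrc.remaining() + uSrc.remaining() + vSrc.remaining();
        ByteBuffer packed = ByteBuffer.allocateDirect(total);
        packed.order(ByteOrder.nativeOrder());

        packed.put(ySrc);
        packed.put(uSrc);
        packed.put(vSrc);
        packed.flip(); // position 0, limit = number of bytes written
        return packed;
    }
}
```

Allocating a new direct buffer for every frame is wasteful at camera frame rates; a real implementation would more likely reuse a small pool of pre-allocated buffers.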

AR filters

AR filters are represented by effect files in DeepAR. You can load them to preview different AR filters.

Places you can get DeepAR effects:

- Download a free filter pack.

- Visit the DeepAR asset store.

- Create your own filters with DeepAR Studio.

Load an effect using the switchEffect method.
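The left and right buttons in the sample app step through the available effects. A minimal sketch of that cycling logic, assuming the effects are kept as a list of file names, could look like the following. EffectCycler is a hypothetical helper, not a DeepAR class; the name it returns is what you would then pass on to switchEffect, whose exact arguments should be checked against the DeepAR SDK reference.

```java
import java.util.ArrayList;
import java.util.List;

/** Steps forwards and backwards through a fixed list of effect names, wrapping around. */
final class EffectCycler {
    private final List<String> effects;
    private int index = 0;

    EffectCycler(List<String> effects) {
        if (effects == null || effects.isEmpty()) {
            throw new IllegalArgumentException("at least one effect is required");
        }
        // Defensive copy so later changes to the caller's list do not affect cycling.
        this.effects = new ArrayList<>(effects);
    }

    /** The currently selected effect. */
    String current() {
        return effects.get(index);
    }

    /** Moves to the next effect, wrapping from the last back to the first. */
    String next() {
        index = (index + 1) % effects.size();
        return current();
    }

    /** Moves to the previous effect, wrapping from the first back to the last. */
    String previous() {
        // Adding size() before the modulo keeps the index non-negative.
        index = (index - 1 + effects.size()) % effects.size();
        return current();
    }
}
```

Keeping the index arithmetic in one place means the button handlers only need to call next() or previous() and forward the result to DeepAR.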

DeepAR Android SDK supports:

- Face filters and masks.

- Background replacement.

- Background blur.

- Emotion detection.

- AR mini-games.